So, what is Argo's set of applications?

Argo is a collection of open-source tools under the Cloud Native Computing Foundation (CNCF) umbrella that focus on running and managing workflows and applications on Kubernetes.

ArgoCD:

- Purpose: ArgoCD is a declarative, GitOps continuous delivery tool for Kubernetes.

- How it works: With ArgoCD, application definitions, configurations, and environments are specified in a Git repository. When the repository changes, ArgoCD detects the difference between the desired application state (as defined in the Git repo) and the current state in the cluster, and it takes the necessary actions to align the two states.
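
To make the "desired state in Git" idea concrete, here is a minimal sketch of an ArgoCD Application. The application name, repository URL, path and target namespace are all hypothetical placeholders and not taken from my setup; only the overall shape of the resource matters here.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: example-service            # hypothetical application name
  namespace: argocd                # namespace where ArgoCD itself runs
spec:
  project: default
  source:
    repoURL: https://github.com/example-org/example-repo.git   # hypothetical repo
    targetRevision: main           # branch, tag or commit to track
    path: deploy/staging           # hypothetical folder holding the manifests
  destination:
    server: https://kubernetes.default.svc   # the cluster ArgoCD is running in
    namespace: example             # hypothetical target namespace
  syncPolicy:
    automated:
      prune: true                  # delete resources that were removed from Git
      selfHeal: true               # revert manual changes made in the cluster
```

With automated sync enabled, ArgoCD compares the manifests at that path with what is live in the cluster and applies the difference.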

Argo Workflows:

- Purpose: Argo Workflows is a Kubernetes-native workflow engine for executing sequences of tasks—processing pipelines or directed acyclic graphs (DAGs) of tasks—on Kubernetes.

- How it works: Users define workflows using YAML, and Argo ensures that these tasks are executed on the cluster in the correct order. It's often used for machine learning pipelines, data processing, CI/CD, and batch jobs.
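
As a rough illustration of what "tasks in the correct order" means, the sketch below defines a small DAG: one task runs first, two tasks run in parallel after it, and a final task waits for both. The task names and the alpine image are assumptions picked only for the example.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: dag-example-       # Kubernetes appends a random suffix
spec:
  entrypoint: main
  templates:
    - name: main
      dag:
        tasks:
          - name: prepare
            template: echo
            arguments:
              parameters: [{name: message, value: "prepare"}]
          - name: api-tests        # runs after prepare
            dependencies: [prepare]
            template: echo
            arguments:
              parameters: [{name: message, value: "api tests"}]
          - name: browser-tests    # runs in parallel with api-tests
            dependencies: [prepare]
            template: echo
            arguments:
              parameters: [{name: message, value: "browser tests"}]
          - name: report           # waits for both test tasks
            dependencies: [api-tests, browser-tests]
            template: echo
            arguments:
              parameters: [{name: message, value: "report"}]
    # Reusable step: prints whatever message it is given
    - name: echo
      inputs:
        parameters:
          - name: message
      container:
        image: alpine:3.19         # assumed image, any small image works
        command: [echo, "{{inputs.parameters.message}}"]
```

Because the manifest uses generateName, it has to be submitted with kubectl create -f (or argo submit) rather than kubectl apply.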

Argo Events:

- Purpose: Argo Events is an event-driven framework that helps to trigger Kubernetes workflows or any other Kubernetes resources.

- How it works: It listens to various event sources (like webhooks, message queues, cloud events, etc.), processes those events, and based on those, can trigger specified Kubernetes actions (like starting a workflow).
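
A minimal sketch of that flow, modelled on the webhook example in the Argo Events docs: an EventSource exposes an HTTP endpoint, and a Sensor creates a Workflow whenever an event arrives. The resource names, the port, the endpoint path and the service account are assumptions for illustration; the service account needs permission to create Workflows.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: EventSource
metadata:
  name: webhook                    # hypothetical name
spec:
  service:
    ports:
      - port: 12000
        targetPort: 12000
  webhook:
    deploy:                        # event name, referenced by the Sensor below
      port: "12000"
      endpoint: /deploy            # hypothetical path
      method: POST
---
apiVersion: argoproj.io/v1alpha1
kind: Sensor
metadata:
  name: webhook
spec:
  template:
    serviceAccountName: operate-workflow-sa   # hypothetical service account
  dependencies:
    - name: deploy-event
      eventSourceName: webhook
      eventName: deploy
  triggers:
    - template:
        name: run-workflow
        k8s:
          operation: create        # create the resource defined below
          source:
            resource:
              apiVersion: argoproj.io/v1alpha1
              kind: Workflow
              metadata:
                generateName: triggered-
              spec:
                entrypoint: hello
                templates:
                  - name: hello
                    container:
                      image: alpine:3.19
                      command: [echo, "triggered by a webhook event"]
```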

Using Argo Events & Argo Workflow to drive CI/CD along with ArgoCD and GitHub Actions.

Currently, we have a service running in our K8s cluster. The service is built using GitHub Actions and deployed using ArgoCD. This is still in our pre-prod environment, and we want to run some tests once it's deployed.

The problem is that it can currently take anywhere from 3 to 5 minutes for ArgoCD to deploy the changes once the image has been pushed to Docker Hub. So how can I reliably and effectively kick off our k6 browser and API tests against the newly deployed code in staging?

I hope that's where Argo Events with Argo Workflows will come in. The theory is that we have a workflow that kicks off some testing. It will be triggered by Argo Events watching for a build in GitHub and maybe forcing the ArgoCD deployment, or alternatively watching for the deployment itself. This post will follow along as I nut this out and deploy everything needed to our K8s control cluster.
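
To give an idea of what the testing side of that theory could look like, here is a sketch of a WorkflowTemplate that runs a k6 script. It is not the final setup: the template name, the k6-scripts ConfigMap and the api-test.js file are hypothetical, and it assumes the test script has already been loaded into that ConfigMap.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: WorkflowTemplate
metadata:
  name: k6-staging-tests           # hypothetical name
spec:
  entrypoint: run-k6
  volumes:
    - name: scripts
      configMap:
        name: k6-scripts           # hypothetical ConfigMap holding the test scripts
  templates:
    - name: run-k6
      container:
        image: grafana/k6:latest   # official k6 image; pin a version in practice
        command: [k6]
        args: [run, /scripts/api-test.js]   # hypothetical script name
        volumeMounts:
          - name: scripts
            mountPath: /scripts
```

A Sensor could then submit a Workflow that references this template once the deployment event arrives.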

For background:

ArgoCD: https://github.com/argoproj/argo-cd / https://argoproj.github.io/cd/

Argo Events: https://github.com/argoproj/argo-events / https://argoproj.github.io/events/

Argo Workflows: https://github.com/argoproj/argo-workflows / https://argoproj.github.io/workflows/

Before we start: why Argo? It is free and works perfectly with K8s, so why not? I won't go through the setup of ArgoCD or the K8s cluster in this post.

Argo Events Deployment

First I will create my Helm chart, where I will list argo-events as a dependency and then configure it using the values file:

File/folder structure:

argocd-apps/
  staging/
    argocd.yaml
    argo-stack-control.yaml
    ...
helm-charts/
  argocd/
  argo-events/
    staging/
      values-control.yaml
    Chart.yaml
  argo-workflows/
    staging/
      values-control.yaml
    Chart.yaml
  ...

The content of argo-events/Chart.yaml will be simple:

apiVersion: v2
name: argo-events
version: 1.0.0
dependencies:
  - name: argo-events
    version: 2.4.1
    repository: https://argoproj.github.io/argo-helm

I just declared the argo-events repo, the chart's name, and the chart version in my dependencies. Note that version: 1.0.0 is the version of my own chart, not the version of the argo-events chart.

Next are the values. I have all my values files split by cluster and environment. As this will only be deployed on the control cluster of any environment (currently staging), the file name is values-control.yaml. You don't need to do it this way, and I am sure there are many different ways of doing it.

I start by getting all the default values from the repo https://github.com/argoproj/argo-helm/blob/main/charts/argo-events/values.yaml and use what I think I need or want to customise.

The content of staging/values-control.yaml:

argo-events:
  # -- Provide a name in place of `argo-events`
  nameOverride: argo-events

  ## Custom resource configuration
  crds:
    # -- Install and upgrade CRDs
    install: true

  global:
    # -- Mapping between IP and hostnames that will be injected as entries in the pod's hosts files
    hostAliases: []
    # - ip: 10.20.30.40
    #   hostnames:
    #   - git.myhostname

  ## Argo Events controller
  controller:
    # -- Argo Events controller name string
    name: controller-manager

    rbac:
      # -- Create events controller RBAC
      enabled: true
      # -- Restrict events controller to operate only in a single namespace instead of cluster-wide scope.
      namespaced: false

    # -- The number of events controller pods to run.
    replicas: 1

    # -- Environment variables to pass to events controller
    env: []
    # - name: DEBUG_LOG
    #   value: "true"

    ## Events controller metrics configuration
    metrics:
      # -- Deploy metrics service
      enabled: true
      serviceMonitor:
        # -- Enable a prometheus ServiceMonitor
        enabled: true

  ## Argo Events admission webhook
  webhook:
    # -- Enable admission webhook. Applies only for cluster-wide installation
    enabled: true
    # -- Environment variables to pass to event controller
    # @default -- `[]` (See [values.yaml])
    env: []
    # - name: DEBUG_LOG
    #   value: "true"
    # -- Port to listen on
    port: 443

So I have left in the basics of what I think I will change later, e.g. environment variables so I can adjust logging if needed, the port of the webhook, etc. To be honest, there is almost nothing there that needs changing straight away.

Next is to add Argo Events to an ApplicationSet. If you are not sure what this is, I suggest having a read of the ArgoCD docs, but basically it's just a way to tell ArgoCD what to deploy.

The content of argocd-apps/staging/argo-stack-control.yaml will be simple:

apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: argo-stack-control-cluster
  namespace: argocd # Namespace in which ArgoCD is deployed
spec:
  generators:
    - list:
        elements:
          # Argo-Events
          - appName: argo-events
            namespace: argo-events
  template:
    metadata:
      name: "{{appName}}"
      labels:
        owner: platform
        type: common-stack
    spec:
      project: common-stack
      source:
        repoURL: https://github.com/some-repos/helm.git
        targetRevision: argo-events
        path: "helm-charts/{{appName}}"
        helm:
          ignoreMissingValueFiles: true
          valueFiles:
            - staging/values-control.yaml
      destination:
        name: in-cluster # This needs to match the 'name' of the cluster (which must already exist in ArgoCD)
        namespace: "{{namespace}}"
      syncPolicy:
        automated:
          prune: true
          selfHeal: true
        syncOptions:
          - CreateNamespace=true
          - ServerSideApply=true

Now I commit and push to GitHub. Note that I have listed targetRevision as argo-events; this is just the branch I am working on.

Once pushed, I apply the ApplicationSet to the cluster using kubectl apply -f argocd-apps/staging/argo-stack-control.yaml

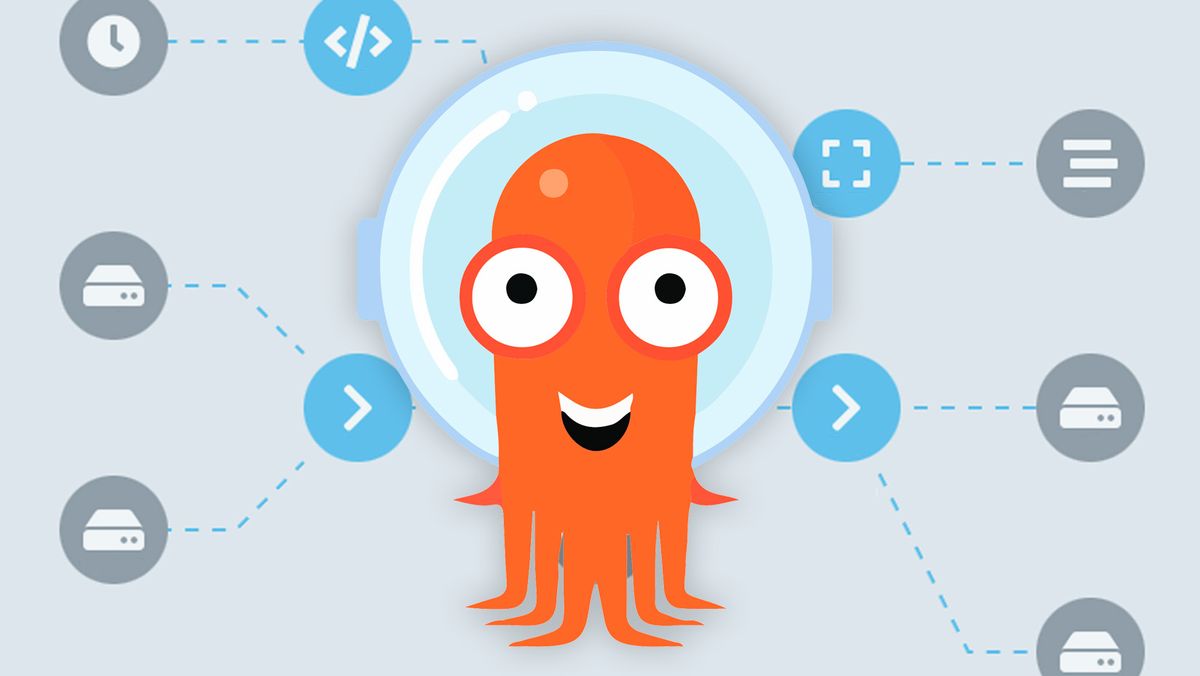

I can then check the progress of the deploying in my ArgoCD UI:

So what have we deployed?

- Sensor Custom Resource Definition - allows Sensors to be defined on the K8s cluster

- EventSource Custom Resource Definition - allows EventSources to be defined on the K8s cluster

- EventBus Custom Resource Definition - allows EventBuses to be defined on the K8s cluster

- Controller Deployment

- Validation Webhook Deployment

- Service Accounts

- Roles / Cluster Roles

- Role Bindings / Cluster Role Bindings

What does that all mean? Well, we will get to that later in the post!
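
As a small preview of how these resource definitions get used: EventSources and Sensors talk to each other over an EventBus, and by default they look for one named default in their own namespace. A minimal sketch, based on the native NATS example in the Argo Events docs, looks like this. The replica count and auth setting are just the documented example values, not something I have tuned.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: EventBus
metadata:
  name: default                    # EventSources and Sensors use this name by default
  namespace: argo-events
spec:
  nats:
    native:
      replicas: 3                  # example value from the docs
      auth: token                  # clients authenticate with a generated token
```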

Argo Workflow Deployment

Now that we have Events deployed, we can move on to Workflows, repeating the process we used for Argo Events.

The content of argo-workflows/Chart.yaml will be simple:

apiVersion: v2
name: argo-workflows
version: 1.0.0
dependencies:
  - name: argo-workflows
    version: 0.33.3
    repository: https://argoproj.github.io/argo-helm

I start by getting all the default values from the repo https://github.com/argoproj/argo-helm/blob/main/charts/argo-workflows/values.yaml and use what I think I need or want to customise.

The content of argo-workflows/staging/values-control.yaml:

argo-workflows:
  ## Custom resource configuration
  crds:
    # -- Install and upgrade CRDs
    install: true

  # -- String to partially override "argo-workflows.fullname" template
  nameOverride: argo-workflows

  workflow:
    serviceAccount:
      # -- Specifies whether a service account should be created
      create: true
      # -- Service account which is used to run workflows
      name: "argo-workflow"
    rbac:
      # -- Adds Role and RoleBinding for the above specified service account to be able to run workflows.
      # A Role and Rolebinding pair is also created for each namespace in controller.workflowNamespaces (see below)
      create: true

  controller:
    metricsConfig:
      # -- Enables prometheus metrics server
      enabled: true
      # -- Path is the path where metrics are emitted. Must start with a "/".
      path: /metrics
      # -- Port is the port where metrics are emitted
      port: 9090
    # -- Number of workflow workers
    workflowWorkers: 15
    # telemetryConfig controls the path and port for prometheus telemetry. Telemetry is enabled and emitted in the same endpoint
    # as metrics by default, but can be overridden using this config.
    telemetryConfig:
      # -- Enables prometheus telemetry server
      enabled: true
    serviceMonitor:
      # -- Enable a prometheus ServiceMonitor
      enabled: true
    # -- Workflow controller name string
    name: workflow-controller
    # -- Specify all namespaces where this workflow controller instance will manage
    # workflows. This controls where the service account and RBAC resources will
    # be created. Only valid when singleNamespace is false.
    workflowNamespaces:
      - argo-workflow
    logging:
      # -- Set the logging level (one of: `debug`, `info`, `warn`, `error`)
      level: info
      # -- Set the glog logging level
      globallevel: "0"
      # -- Set the logging format (one of: `text`, `json`)
      format: "text"
    # -- Service type of the controller Service
    serviceType: ClusterIP
    # -- The number of controller pods to run
    replicas: 1
    clusterWorkflowTemplates:
      # -- Create a ClusterRole and CRB for the controller to access ClusterWorkflowTemplates.
      enabled: true
    # -- Extra containers to be added to the controller deployment

  # mainContainer adds default config for main container that could be overriden in workflows template

  server:
    # -- Deploy the Argo Server
    enabled: true
    # -- Value for base href in index.html. Used if the server is running behind reverse proxy under subpath different from /.
    ## only updates base url of resources on client side,
    ## it's expected that a proxy server rewrites the request URL and gets rid of this prefix
    ## https://github.com/argoproj/argo-workflows/issues/716#issuecomment-433213190
    baseHref: /
    # -- Server name string
    name: server
    # -- Service type for server pods
    serviceType: ClusterIP
    # -- Service port for server
    servicePort: 2746
    replicas: 1
    ## Argo Server Horizontal Pod Autoscaler
    # -- Run the argo server in "secure" mode. Configure this value instead of `--secure` in extraArgs.
    ## See the following documentation for more details on secure mode:
    ## https://argoproj.github.io/argo-workflows/tls/
    secure: false
    logging:
      # -- Set the logging level (one of: `debug`, `info`, `warn`, `error`)
      level: info
      # -- Set the glog logging level
      globallevel: "0"
      # -- Set the logging format (one of: `text`, `json`)
      format: "text"
    ## Ingress configuration.
    # ref: https://kubernetes.io/docs/user-guide/ingress/
    ingress:
      # -- Enable an ingress resource
      enabled: true
      ingressClassName: "nginx"
      # -- List of ingress hosts
      ## Hostnames must be provided if Ingress is enabled.
      ## Secrets must be manually created in the namespace
      hosts:
        - workflows.staging.wonderphiltech.io
      # -- List of ingress paths
      paths:
        - /
      # -- Ingress path type. One of `Exact`, `Prefix` or `ImplementationSpecific`
      pathType: Prefix
      # -- Ingress TLS configuration
      tls:
        - hosts:
            - workflows.staging.wonderphiltech.io
          secretName: argo-workflows-secret
    clusterWorkflowTemplates:
      # -- Create a ClusterRole and CRB for the server to access ClusterWorkflowTemplates.
      enabled: true
      # -- Give the server permissions to edit ClusterWorkflowTemplates.
      enableEditing: true
    # SSO configuration when SSO is specified as a server auth mode.
    sso:
      # -- Create SSO configuration
      ## SSO is activated by adding --auth-mode=sso to the server command line.
      enabled: true
      # -- The root URL of the OIDC identity provider
      issuer: https://github.com
      clientId:
        # -- Name of secret to retrieve the app OIDC client ID
        name: argo-server-sso
        # -- Key of secret to retrieve the app OIDC client ID
        key: client-id
      clientSecret:
        # -- Name of a secret to retrieve the app OIDC client secret
        name: argo-server-sso
        # -- Key of a secret to retrieve the app OIDC client secret
        key: client-secret
      # - The OIDC redirect URL. Should be in the form <argo-root-url>/oauth2/callback.
      redirectUrl: https://workflow.staging.wonderphiltech.io/oauth2/callback
      rbac:
        # -- Adds ServiceAccount Policy to server (Cluster)Role.
        enabled: true
      # -- Scopes requested from the SSO ID provider
      ## The 'groups' scope requests group membership information, which is usually used for authorization decisions.
      scopes:
        - repo
        # - groups
      # -- Override claim name for OIDC groups
      customGroupClaimName: ""
      # -- Specify the user info endpoint that contains the groups claim
      ## Configure this if your OIDC provider provides groups information only using the user-info endpoint (e.g. Okta)
      userInfoPath: ""
      # -- Skip TLS verification for the HTTP client
      insecureSkipVerify: false

Then I update the ApplicationSet to include the Workflows deployment.

The content of argocd-apps/staging/argo-stack-control.yaml is now:

apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: argo-stack-control-cluster
  namespace: argocd # Namespace in which ArgoCD is deployed
spec:
  generators:
    - list:
        elements:
          # Argo-Events
          - appName: argo-events
            namespace: argo-events
          # Argo-Workflows
          - appName: argo-workflows
            namespace: argo-workflows
  template:
    metadata:
      name: "{{appName}}"
      labels:
        owner: platform
        type: common-stack
    spec:
      project: common-stack
      source:
        repoURL: https://github.com/some-repo/helm.git
        targetRevision: argo-events
        path: "helm-charts/{{appName}}"
        helm:
          ignoreMissingValueFiles: true
          valueFiles:
            - staging/values-control.yaml
      destination:
        name: in-cluster # This needs to match the 'name' of the cluster (which must already exist in ArgoCD)
        namespace: "{{namespace}}"
      syncPolicy:
        automated:
          prune: true
          selfHeal: true
        syncOptions:
          - CreateNamespace=true
          - ServerSideApply=true

Save everything, commit and push to GitHub. Then I reapply using the kubectl command:

kubectl apply -f argocd-apps/staging/argo-stack-control.yaml

Now, from the above config, I had some issues, and they were:

- Ingress was not generating a certificate, so I had to add these annotations to the ingress config:

annotations:
  kubernetes.io/ingress.class: nginx
  cert-manager.io/cluster-issuer: letsencrypt-staging-wpt

- SSO is not working. I hate it when a company has two products that can't use the same way of doing things, in this case SSO, so I could not copy or reuse the config I have for ArgoCD SSO. In fact, when I turned the auth mode to sso it turned off authentication completely, so I am going to come back to that at a later stage.

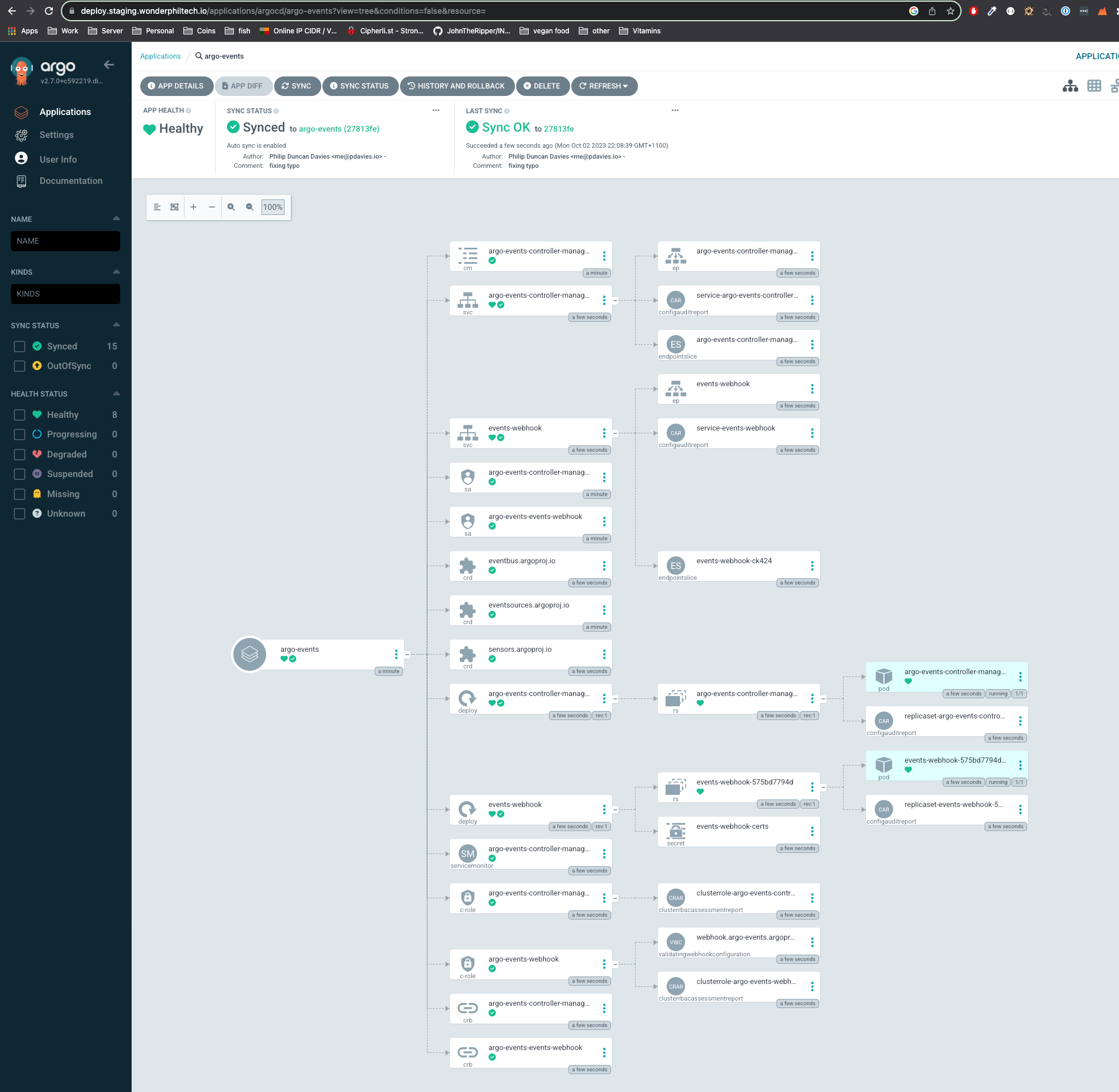

I can now go to the Workflow URL and I can see it up and running:

In the next post, I will go through setting up a workflow that includes sensors and triggers!