In this post I am going to cover deploying a MinIO Tenant, which is the layer that provides the S3 API and where your buckets are created.

If you haven't set up the MinIO Operator yet, follow Part 1 of this series to get set up; this post assumes that you have.

Background

So this should be simple, but the documentation, while all there, is not organized in the greatest way, which made configuring everything difficult.

Basically, I want to deploy a MinIO Tenant via Helm charts using ArgoCD, rather than with the MinIO kubectl plugin.

The Tenant I want to build will provide long-term storage for my observability stack. There are a few things this post won't cover that you would need to make it fully production-grade and secure, e.g. setting up KMS and server-side encryption.

Helm Chart

First, we create a chart and list MinIO's tenant chart as a dependency.

helm-charts/minio-tenant-observability

```yaml
apiVersion: v2
name: minio-observability-storage
home: https://min.io
icon: https://min.io/resources/img/logo/MINIO_wordmark.png
version: 0.0.0
appVersion: 0.0.0
type: application
description: A Helm chart for MinIO tenant
dependencies:
  - name: tenant
    repository: https://operator.min.io/
    version: 5.0.6
```

Pretty simple. Next is the values file, which is where things got a little annoying; I'll explain why as I go through it:

helm-charts/minio-tenant-observability/staging/values-control.yaml

First part (the complete file is given below):

tenant:

# Tenant name

name: minio-observability-staging

## Secret name that contains additional environment variable configurations.

## The secret is expected to have a key named config.env containing environment variables exports.

configuration:

name: minio-observability-staging-env-configuration

pools:

- servers: 4

name: pool-0

volumesPerServer: 4

## size specifies the capacity per volume

size: 30Gi

## storageClass specifies the storage class name to be used for this pool

storageClassName: ext-nas-storage-class

securityContext:

runAsUser: 100

runAsGroup: 1000

runAsNonRoot: true

fsGroup: 1000

metrics:

enabled: true

port: 9000

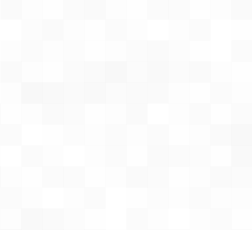

protocol: httpconfiguration.namethis is a secret that should be created beforehand and includes a username and password, so should be created in a secure method.poolsthere is a lot of configuration that you can use here and if you have used the MinIO console to create a Tenant and are now trying to replicate it into Helm the main thing to note is, in the console by default the console will use "Default" pod placement, this is fine if you have 4 usable k8 nodes, but in my case I have 2 API nodes and 2 normal nodes. So in the helm values, you need to make sure you do not have anynodeSelectororaffinityconfig otherwise the pods will not start.

3. securityContext this was important for my setup and may not be an issue for you. Our storage server would only allow for files/folders to be written with a user called _apt which for us the UID was 100 so this needed to be updated, otherwise, everything would start but you would have a lot of logs complaining that permission was denied.
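To get a feel for what this pool actually gives you, here is a rough capacity calculation. It is a sketch under stated assumptions: all 16 drives (4 servers × 4 volumes) form a single erasure set, and the parity is the `EC:4` that the configuration secret later in this post sets through `MINIO_STORAGE_CLASS_STANDARD`. The `Pool` class and helper functions are hypothetical, and MinIO's real usable figure will be a little lower because of metadata overhead.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    servers: int
    volumes_per_server: int
    volume_size_gi: int  # "size" in the values file: capacity per volume


def raw_capacity_gi(pool: Pool) -> int:
    """Total provisioned capacity across every volume in the pool."""
    return pool.servers * pool.volumes_per_server * pool.volume_size_gi


def usable_capacity_gi(pool: Pool, parity: int, set_size: int) -> float:
    """Approximate usable capacity under erasure coding.

    Assumes every erasure set has `set_size` drives, `parity` of which hold
    parity shards, so only (set_size - parity) / set_size of the raw
    capacity stores object data.
    """
    drives = pool.servers * pool.volumes_per_server
    if drives % set_size != 0:
        raise ValueError("drive count must be a multiple of the erasure set size")
    if not 0 < parity <= set_size // 2:
        raise ValueError("parity must be between 1 and half the erasure set size")
    return raw_capacity_gi(pool) * (set_size - parity) / set_size


pool0 = Pool(servers=4, volumes_per_server=4, volume_size_gi=30)
print(raw_capacity_gi(pool0))  # 480
print(usable_capacity_gi(pool0, parity=4, set_size=16))  # 360.0
```

So roughly a quarter of the 480Gi provisioned on the NAS goes to parity; raising the parity trades more usable space for tolerance of more failed drives.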

The last part is all about exposing the Tenant API and Console, and this caused me the most trouble to figure out. As I said, the docs are there but not so obvious: I didn't find what I needed on their "how to install" web page, but I found it in the Git repo (which I think is a bit better than the web page as well).

```yaml
tenant:
  tenant:
    features:
      bucketDNS: true
      domains:
        console: https://s3-console.staging.domain.io
        minio:
          - https://s3.staging.domain.io
    buckets:
      - name: observability-staging
        region: eu-west-1
    # Tenant scrape configuration will be added to prometheus managed by the prometheus-operator.
    prometheusOperator: true
  ingress:
    api:
      enabled: true
      labels: {}
      annotations:
        cert-manager.io/cluster-issuer: letsencrypt-staging-wpt
        kubernetes.io/ingress.class: "nginx"
        nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
        nginx.ingress.kubernetes.io/rewrite-target: /
        nginx.ingress.kubernetes.io/proxy-body-size: "0"
        nginx.ingress.kubernetes.io/server-snippet: |
          client_max_body_size 0;
        nginx.ingress.kubernetes.io/configuration-snippet: |
          chunked_transfer_encoding off;
      tls:
        - secretName: minio-tenant-observability-general-tls
          hosts:
            - s3.staging.domain.io
      host: s3.staging.domain.io
      path: /
      pathType: Prefix
    console:
      enabled: true
      labels: {}
      annotations:
        cert-manager.io/cluster-issuer: letsencrypt-staging-wpt
        kubernetes.io/ingress.class: "nginx"
        nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
        nginx.ingress.kubernetes.io/rewrite-target: /
        nginx.ingress.kubernetes.io/proxy-body-size: "0"
        nginx.ingress.kubernetes.io/server-snippet: |
          client_max_body_size 0;
        nginx.ingress.kubernetes.io/configuration-snippet: |
          chunked_transfer_encoding off;
      tls:
        - secretName: minio-tenant-observability-con-general-tls
          hosts:
            - s3-console.staging.domain.io
      host: s3-console.staging.domain.io
      path: /
      pathType: Prefix
```

1. `features.domains`: this is really important. If you do not define these and still define the ingress later, nothing will work. This tells MinIO to expect and use these domains for requests; otherwise, it will only work locally inside the cluster.
2. `ingress.api.annotations` and `ingress.console.annotations`: here was my pain point, and for the ingress to work this is the most important part. If you do not have the following, you can kiss goodbye to anything being accessible:

```yaml
nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
nginx.ingress.kubernetes.io/rewrite-target: /
nginx.ingress.kubernetes.io/proxy-body-size: "0"
nginx.ingress.kubernetes.io/server-snippet: |
  client_max_body_size 0;
nginx.ingress.kubernetes.io/configuration-snippet: |
  chunked_transfer_encoding off;
```

Without these, you get a heap of errors, the main one being HTTP status code 400; if you curl the endpoint, it tells you that you are sending an HTTP request to an HTTPS server. Basically, TLS is terminated at the ingress controller, but the MinIO pods themselves serve HTTPS, so the ingress has to be told to talk HTTPS to the backend. According to the docs on GitHub, there is more configuration that could be done around making the TLS use cert-manager and Let's Encrypt, but this seems to be working for me without it.
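Because a missing annotation only shows up later as those 400s, a quick check before pushing can save a debugging session. The sketch below assumes the values file has already been parsed into a Python dict (for example with PyYAML's `yaml.safe_load`, not shown) using the nested `tenant` → `ingress` layout of the complete values file; `REQUIRED` and `missing_annotations` are hypothetical names, and only the three plain-valued annotations from the list above are checked.

```python
# Plain-valued annotations that both ingresses needed in this setup.
REQUIRED = {
    "nginx.ingress.kubernetes.io/backend-protocol": "HTTPS",
    "nginx.ingress.kubernetes.io/rewrite-target": "/",
    "nginx.ingress.kubernetes.io/proxy-body-size": "0",
}


def missing_annotations(values: dict) -> dict[str, list[str]]:
    """Return, per enabled ingress (api/console), the required annotations
    that are absent or set to another value. An empty dict means all is well."""
    ingress = values.get("tenant", {}).get("ingress", {})
    problems: dict[str, list[str]] = {}
    for name in ("api", "console"):
        spec = ingress.get(name, {})
        if not spec.get("enabled", False):
            continue  # a disabled ingress does not need the annotations
        annotations = spec.get("annotations") or {}
        bad = [key for key, want in REQUIRED.items() if str(annotations.get(key)) != want]
        if bad:
            problems[name] = bad
    return problems


example = {
    "tenant": {
        "ingress": {
            "api": {"enabled": True, "annotations": dict(REQUIRED)},
            "console": {
                "enabled": True,
                # backend-protocol forgotten: the case that produces the 400s
                "annotations": {
                    "nginx.ingress.kubernetes.io/rewrite-target": "/",
                    "nginx.ingress.kubernetes.io/proxy-body-size": "0",
                },
            },
        }
    }
}
print(missing_annotations(example))
# {'console': ['nginx.ingress.kubernetes.io/backend-protocol']}
```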

The complete values file looks like this:

```yaml
tenant:
  tenant:
    # Tenant name
    name: minio-observability-staging
    ## Secret name that contains additional environment variable configurations.
    ## The secret is expected to have a key named config.env containing environment variables exports.
    configuration:
      name: minio-observability-staging-env-configuration
    pools:
      - servers: 4
        name: pool-0
        volumesPerServer: 4
        ## size specifies the capacity per volume
        size: 30Gi
        ## storageClass specifies the storage class name to be used for this pool
        storageClassName: ext-nas-storage-class
        securityContext:
          runAsUser: 100
          runAsGroup: 1000
          runAsNonRoot: true
          fsGroup: 1000
    metrics:
      enabled: true
      port: 9000
      protocol: http
    features:
      bucketDNS: true
      domains:
        console: https://s3-console.staging.domain.io
        minio:
          - https://s3.staging.domain.io
    buckets:
      - name: observability-staging
        region: eu-west-1
    # Tenant scrape configuration will be added to prometheus managed by the prometheus-operator.
    prometheusOperator: true
  ingress:
    api:
      enabled: true
      labels: {}
      annotations:
        cert-manager.io/cluster-issuer: letsencrypt-staging
        kubernetes.io/ingress.class: "nginx"
        nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
        nginx.ingress.kubernetes.io/rewrite-target: /
        nginx.ingress.kubernetes.io/proxy-body-size: "0"
        nginx.ingress.kubernetes.io/server-snippet: |
          client_max_body_size 0;
        nginx.ingress.kubernetes.io/configuration-snippet: |
          chunked_transfer_encoding off;
      tls:
        - secretName: minio-tenant-observability-general-tls
          hosts:
            - s3.staging.domain.io
      host: s3.staging.domain.io
      path: /
      pathType: Prefix
    console:
      enabled: true
      labels: {}
      annotations:
        cert-manager.io/cluster-issuer: letsencrypt-staging
        kubernetes.io/ingress.class: "nginx"
        nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
        nginx.ingress.kubernetes.io/rewrite-target: /
        nginx.ingress.kubernetes.io/proxy-body-size: "0"
        nginx.ingress.kubernetes.io/server-snippet: |
          client_max_body_size 0;
        nginx.ingress.kubernetes.io/configuration-snippet: |
          chunked_transfer_encoding off;
      tls:
        - secretName: minio-tenant-observability-con-general-tls
          hosts:
            - s3-console.staging.domain.io
      host: s3-console.staging.domain.io
      path: /
      pathType: Prefix
```
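Why does `tenant` appear twice? Helm hands a dependency only the block of the parent's values stored under a key matching the dependency's name (or alias). So the outer `tenant:` is the dependency name from the Chart.yaml, and the inner `tenant:` is the tenant chart's own top-level key, sitting alongside its `ingress` key. The sketch below mimics that scoping in plain Python; `subchart_values` and `tenant_name` are hypothetical helpers, the latter assuming the chart templates read the name from `.Values.tenant.name`, and Helm's other merge rules (the subchart's own defaults and `global` values) are skipped.

```python
import copy


def subchart_values(parent_values: dict, dependency: str) -> dict:
    """The values a dependency chart sees: only the block stored under its
    name (or alias) in the parent's values."""
    return copy.deepcopy(parent_values.get(dependency, {}))


def tenant_name(chart_values: dict):
    """Where the tenant chart is assumed to look up the Tenant name."""
    return chart_values.get("tenant", {}).get("name")


# Trimmed-down version of values-control.yaml, already parsed into a dict.
parent = {
    "tenant": {  # dependency name from Chart.yaml
        "tenant": {"name": "minio-observability-staging"},  # chart's own key
        "ingress": {"api": {"enabled": True}, "console": {"enabled": True}},
    }
}
seen_by_chart = subchart_values(parent, "tenant")
print(sorted(seen_by_chart))  # ['ingress', 'tenant']
print(tenant_name(seen_by_chart))  # minio-observability-staging

# With only one "tenant:" level, the chart sees "name" at its top level
# and the lookup above finds nothing.
single_level = {"tenant": {"name": "minio-observability-staging"}}
print(tenant_name(subchart_values(single_level, "tenant")))  # None
```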

ArgoCD ApplicationSet

Next, we add the chart to our ApplicationSet so that it can be deployed. As this is going to be part of my observability stack, I am just adding it to an existing ApplicationSet.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: prometheus-control
  namespace: argocd
  annotations:
    argocd.argoproj.io/sync-options: Prune=false
    argocd.argoproj.io/sync-wave: "1"
spec:
  generators:
    - list:
        elements:
          - name: kube-prometheus-stack
            path: helm-charts/kube-prometheus-stack
            namespace: observability
          - name: minio-tenant-observability
            path: helm-charts/minio-tenant-observability
            namespace: minio-tenant-observability
          # Promtail
          # - name: grafana-promtail
          #   namespace: observability
          #   path: helm-charts/grafana-promtail
          # # Loki
          # - name: grafana-loki
          #   namespace: observability
          #   path: helm-charts/grafana-loki
          # # Mimir
          # - name: grafana-mimir
          #   namespace: observability
          #   path: helm-charts/grafana-mimir
  template:
    metadata:
      name: "{{name}}-control"
      annotations:
        argocd.argoproj.io/sync-wave: "1"
    spec:
      project: default
      source:
        repoURL: https://github.com/someuser/helm.git
        targetRevision: main
        path: "{{path}}"
        helm:
          ignoreMissingValueFiles: false
          valueFiles:
            - "staging/values-control.yaml"
      destination:
        server: "https://kubernetes.default.svc"
        namespace: "{{namespace}}"
      syncPolicy:
        automated:
          prune: true
          selfHeal: true
        syncOptions:
          - CreateNamespace=true
          - ServerSideApply=true
```
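To see what the list generator produces from this, here is a rough simulation: each element's keys are substituted into the `{{...}}` placeholders of the template, giving one Application per element. It mimics Argo CD's default (non-Go-template) parameter substitution in simplified form; `APP_TEMPLATE`, `substitute` and `render` are hypothetical, and the real controller does far more than this.

```python
import re

# The parts of the ApplicationSet template above that use parameters.
APP_TEMPLATE = {
    "name": "{{name}}-control",
    "path": "{{path}}",
    "valueFiles": ["staging/values-control.yaml"],
    "namespace": "{{namespace}}",
}

ELEMENTS = [
    {"name": "kube-prometheus-stack", "path": "helm-charts/kube-prometheus-stack",
     "namespace": "observability"},
    {"name": "minio-tenant-observability", "path": "helm-charts/minio-tenant-observability",
     "namespace": "minio-tenant-observability"},
]

PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def substitute(value, params: dict):
    """Recursively replace {{key}} placeholders in strings, lists and dicts.
    An unknown key raises KeyError instead of rendering silently."""
    if isinstance(value, str):
        return PLACEHOLDER.sub(lambda m: params[m.group(1)], value)
    if isinstance(value, list):
        return [substitute(v, params) for v in value]
    if isinstance(value, dict):
        return {k: substitute(v, params) for k, v in value.items()}
    return value


def render(template: dict, elements: list[dict]) -> list[dict]:
    """One rendered Application (flattened to a dict) per list element."""
    return [substitute(template, element) for element in elements]


for app in render(APP_TEMPLATE, ELEMENTS):
    # Helm value files are resolved relative to the chart path.
    print(app["name"], app["namespace"], f'{app["path"]}/{app["valueFiles"][0]}')
```

This is also why every chart in the list needs its own `staging/values-control.yaml`: with `ignoreMissingValueFiles: false`, a chart directory without that file fails to render.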

At this point, we are ready to deploy. Do a git add, commit, and push, and ArgoCD should deploy it in no time.

Configuration Secret

Before we check how the ArgoCD deployment has gone, I want to quickly go over the configuration secret that I touched on before. As I said, it contains a username and password, so I don't add it to my Helm charts.

Basically, its config.env key needs to contain the following:

```shell
export MINIO_BROWSER="on"
export MINIO_ROOT_USER="some_user_name"
export MINIO_ROOT_PASSWORD="Strong_password"
export MINIO_STORAGE_CLASS_STANDARD="EC:4"
```

It is just environment variables that get exported into the MinIO containers. I create the secret using the following object, where config.env holds the base64-encoded exports:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: minio-observability-staging-env-configuration
  namespace: minio-tenant-observability
data:
  config.env: >-
    ZXhwb3J0IE1JTklPX0JST1dTRVI9b24KZXhwb3J0IE1JTklPX1JPT1RfVVNFUj1zb21lX3VzZXJfbmFtZQpleHBvcnQgTUlOSU9fUk9PVF9QQVNTV09SRD1TdHJvbmdfcGFzc3dvcmQKZXhwb3J0IE1JTklPX1NUT1JBR0VfQ0xBU1NfU1RBTkRBUkQ9RUM6NAoK
type: Opaque
```
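Hand-encoding base64 is easy to get wrong (one dropped character corrupts the whole value), so here is a small sketch that builds config.env and the Secret manifest with Python's standard library. The defaults mirror the example above; `build_config_env` and `build_secret_manifest` are hypothetical helpers, and if you don't pass a password one is generated with the `secrets` module. Either way, keep the output out of Git.

```python
import base64
import json
import secrets
from typing import Optional


def build_config_env(root_user: str, root_password: str, storage_class: str = "EC:4") -> str:
    """Render config.env as one `export KEY="value"` line per setting.

    No escaping is done, so keep quotes, `$` and backticks out of the values.
    """
    env = {
        "MINIO_BROWSER": "on",
        "MINIO_ROOT_USER": root_user,
        "MINIO_ROOT_PASSWORD": root_password,
        "MINIO_STORAGE_CLASS_STANDARD": storage_class,
    }
    return "".join(f'export {key}="{value}"\n' for key, value in env.items())


def build_secret_manifest(
    name: str, namespace: str, root_user: str, root_password: Optional[str] = None
) -> dict:
    """Return the Secret as a dict, with config.env base64-encoded under `data`."""
    # token_urlsafe only emits [A-Za-z0-9_-], so a generated password needs no escaping.
    password = root_password or secrets.token_urlsafe(24)
    config_env = build_config_env(root_user, password)
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {"config.env": base64.b64encode(config_env.encode("utf-8")).decode("ascii")},
    }


manifest = build_secret_manifest(
    name="minio-observability-staging-env-configuration",
    namespace="minio-tenant-observability",
    root_user="some_user_name",
)
# JSON is valid YAML, so this output can be piped to `kubectl apply -f -`.
print(json.dumps(manifest, indent=2))
```

Kubernetes also accepts a `stringData` field that takes the plain text and encodes it for you, which avoids the base64 step entirely.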

Let's Check

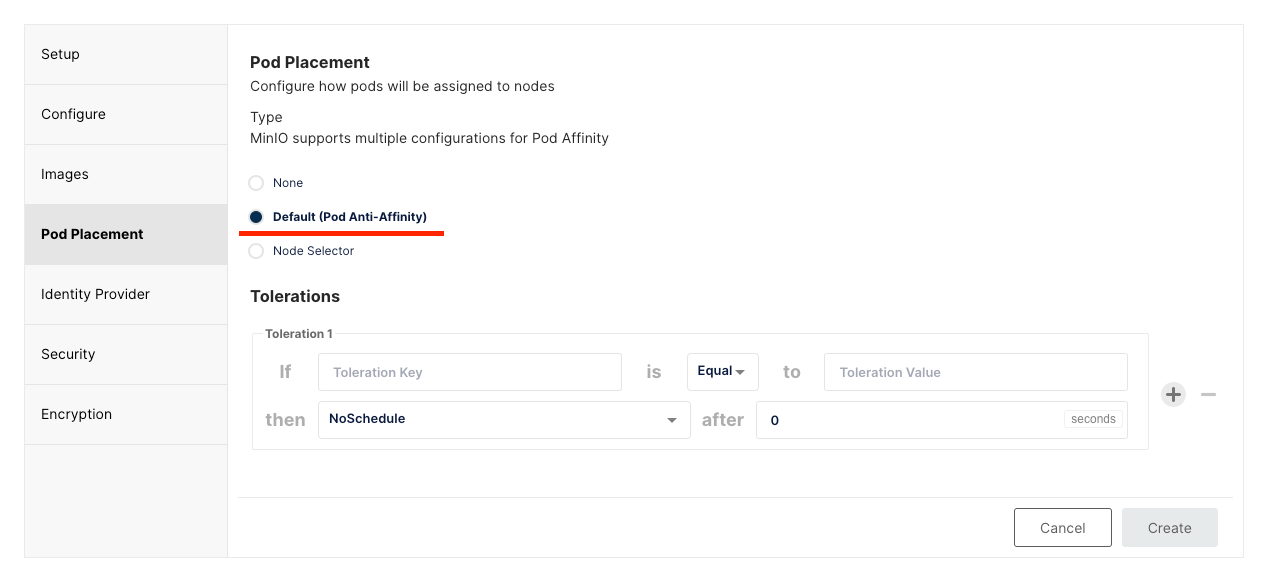

Now in ArgoCD, you should be seeing your Tenant deployed.

The only thing I am still working out is why, even though everything is deployed and up and running, ArgoCD still reports it as "Progressing". It's annoying as hell, but to further confirm that everything is working, we can check the console and try using the S3 API.

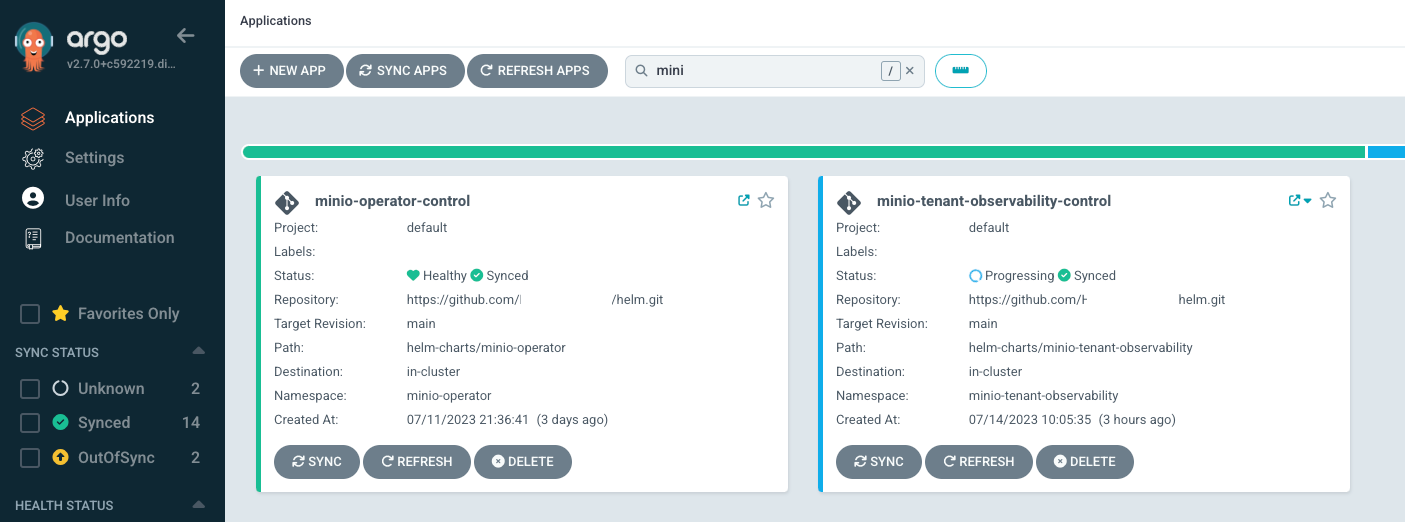

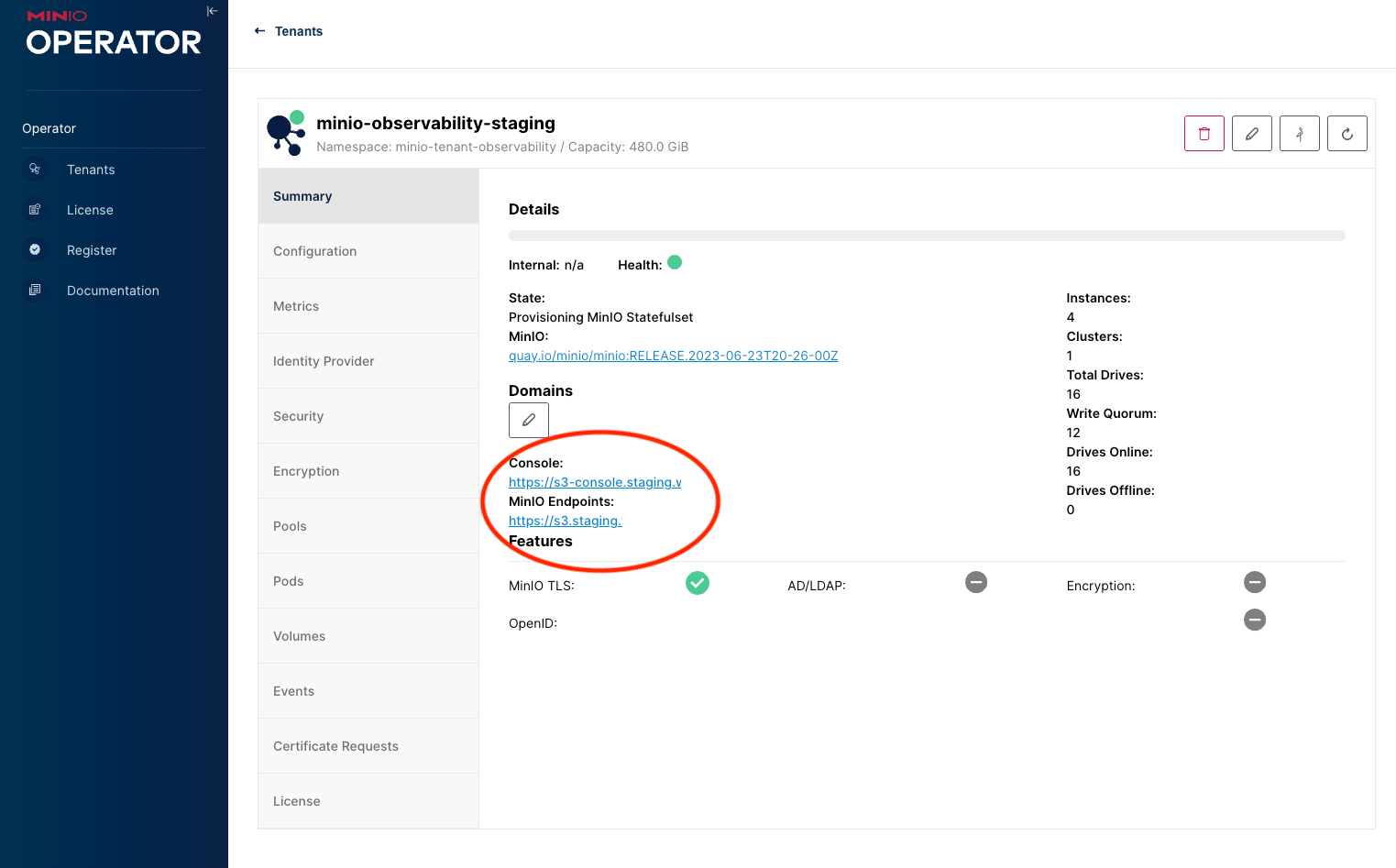

MinIO Operator Console

First, if you go to the console for the operator you should see your new Tenant and it should be all green!

Tenant Console

From the Operator console if you open your tenant you should be shown the URL's for accessing your tenant:

As long as your DNS is correctly configured, you should be able to open the Console URL and get the login page, using the creds from the secret that we create, we should be able to log in.

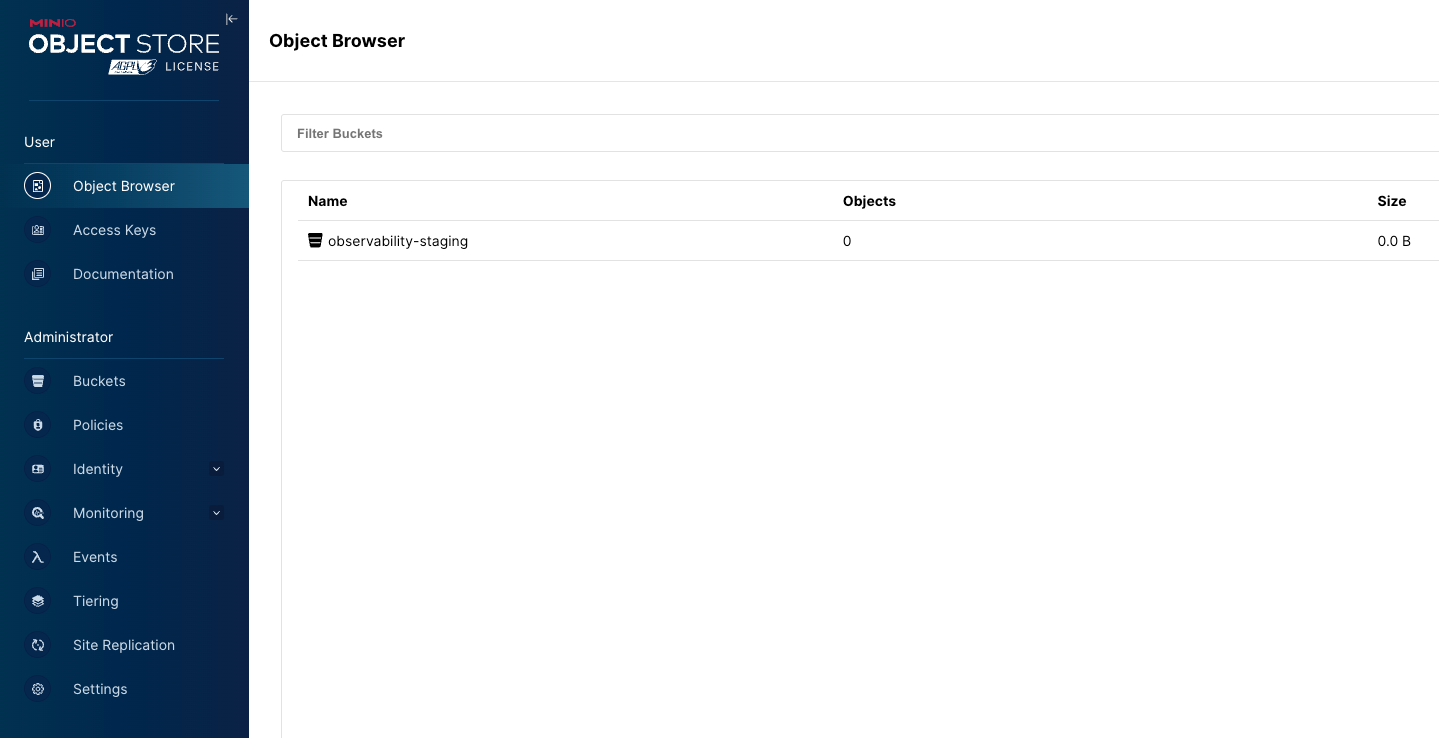

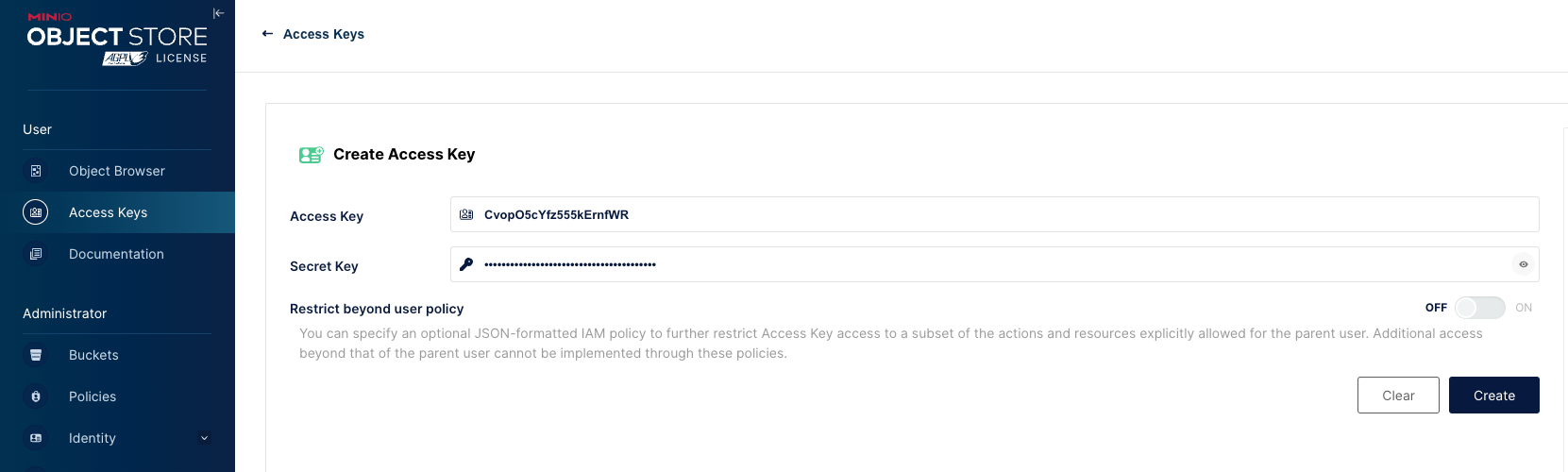

From here, we can create some access keys and then test with the AWS CLI. Open Access Keys and generate a new pair.

Just remember to save the keys, as the secret key will not be visible again.

AWS CLI

Next, open your AWS configuration file. I have set up profiles so I can work with many AWS accounts, so this may differ based on your needs. I created a profile called observability-staging (in the config file, a named profile's section header needs the `profile` prefix):

~/.aws/config

```ini
[profile observability-staging]
region = eu-west-1
output = json
s3 =
    signature_version = s3v4
```

Then, with the access keys that were just created, I add them to my AWS credentials file (~/.aws/credentials), again under the same profile name, observability-staging:

[observability-staging]

aws_access_key_id = some_key_id

aws_secret_access_key = some_access_keyNow to the terminal and let's test its working but running the following:

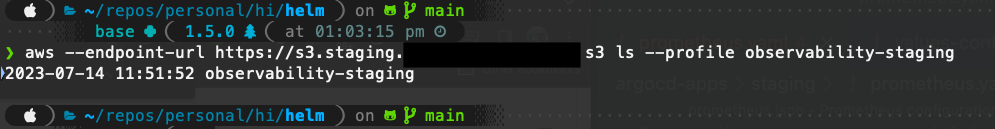

aws --endpoint-url https://s3.staging.domain.io s3 ls --profile observability-staging

From this you should see your bucket:

If you have any issues and these tests don't work, check the logs of the pods running your MinIO Tenant; that should lead you to the problem. Again, there is more that could be done, like encryption, but I will probably cover that in another post. If you have any questions, please pop them in below.